How do GPU and CPU work together?

Let’s clear up the confusion with a simple statement: the CPU is the boss and the GPU is the peasant. The CPU delivers work to the GPU. You could also say the GPU is a complementary aid to the CPU, since it increases the overall performance and efficiency of your system. Let’s dive into the topic to see how they work together and what makes them different.

Working together as a unit: CPU and GPU

As stated above, they work together so that your system can run multiple processes at a time. GPUs were originally introduced for rendering images and high-end graphics. Over time, as GPUs became more popular, they came to be used extensively for speeding up the system as a whole. If you use your system for machine learning, for rendering 3D images and video in high-end games, or for handling large amounts of data, the GPU will be your ultimate savior.

But how do they actually work together, when the CPU is responsible for control and logic while the GPU works in SIMD (Single Instruction, Multiple Data) fashion?

- Basically, the CPU allocates tasks to the GPU and lets the GPU finish them on its behalf.

- Then, through synchronization calls, it checks on the GPU to see whether the task is done or what state it is currently in.
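The two steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: a single worker thread stands in for the GPU, and the names `gpu_task` and `offload_and_wait` are hypothetical, not part of any real GPU API.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def gpu_task(values):
    """Stand-in for offloaded work: one operation applied to every element."""
    time.sleep(0.05)  # pretend the device is busy for a while
    return [v * 2 for v in values]

def offload_and_wait(values):
    # Assumption: one worker thread plays the role of the GPU.
    with ThreadPoolExecutor(max_workers=1) as fake_gpu:
        future = fake_gpu.submit(gpu_task, values)  # CPU hands the task over
        polls = 0
        while not future.done():                    # CPU checks the task state
            polls += 1
            time.sleep(0.01)                        # CPU could do other work here
        return future.result(), polls               # synchronization point

result, polls = offload_and_wait([1, 2, 3])
print(result)  # [2, 4, 6]
```

The point of the design is that `submit` returns immediately, so the CPU stays free until it actually needs the result.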

Drivers and software make the communication between these two core components possible, and they are governed by the operating system. To understand this better, follow the steps below:

- Suppose you are rendering graphics on the GPU. At the top level is the application software you are working in.

- The app establishes a connection with the GPU.

- Then, with the help of the interface, the app sends decoding tasks to the GPU hardware.

- Device drivers and the OS work hand in hand to deliver the output to the hardware.

Point to note: all of these steps actually run on the CPU.

Synchronization:

We presume you now have the big picture, so let’s move on to the technical part. The tasks queued up for the GPU are called frames, and these frames go to the GPU for decoding. A frame can carry a chain of dependencies, GPU-CPU-GPU: the GPU starts on the frame, the CPU has to parse or manipulate the result, and only then can the CPU give the GPU instructions to proceed. Because the tasks sit in a queue, they are decoded one by one, and the next task cannot start decoding until the previous one is done. The device driver puts the GPU work that is waiting to resume on a wait sync, so that it does not perform any garbage operations while the CPU is still working in the background. Once the CPU has sent back its information, it signals the GPU to resume the flow.
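The GPU-CPU-GPU hand-off can be sketched with two threads and two events. This is a minimal illustration, assuming Python threads stand in for the two processors; `cpu_done` plays the role of the wait sync, and all names here are hypothetical rather than taken from a real driver.

```python
import threading

log = []
gpu_stage1_done = threading.Event()  # GPU -> CPU: first stage output is ready
cpu_done = threading.Event()         # CPU -> GPU: the "wait sync" being released

def gpu_worker():
    log.append("gpu: decode frame (stage 1)")
    gpu_stage1_done.set()   # let the CPU pick up the intermediate result
    cpu_done.wait()         # parked here, so no work is done on stale data
    log.append("gpu: resume frame (stage 2)")

def cpu_worker():
    gpu_stage1_done.wait()  # the CPU depends on the GPU's first stage
    log.append("cpu: parse frame")
    cpu_done.set()          # signal the GPU to resume the flow

threads = [threading.Thread(target=gpu_worker),
           threading.Thread(target=cpu_worker)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(log)
```

Whichever thread happens to start first, the events force the order stage 1, parse, stage 2.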

Sharing resources between the CPU and GPU creates dependencies. The CPU has to finish with a frame before the GPU takes it. If the GPU picks the frame up while the CPU is still allocating resources, it may receive the wrong resources. If it picks the frame up before the CPU has allocated anything, the GPU sees the resources as undefined.

These dependencies create stalls, because each processor has to wait for the other to finish one frame before moving to the next. We said earlier that the two work hand in hand to increase performance, and this waiting works against that. To overcome it, multiple instances are created so that the work can be divided. Since both the CPU and the GPU are processors, they can work from a pool of instances and handle multiple frames simultaneously. As a result, the stalls between them are avoided and the system as a whole runs faster.
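A small timing model shows why a pool of instances helps. It is a sketch under simplifying assumptions: every frame costs the same fixed CPU time and GPU time, each processor handles one frame at a time, and a frame holds its instance until the GPU has finished with it. The function name `total_time` and the numbers are hypothetical.

```python
def total_time(frames, cpu_ms, gpu_ms, instances):
    """Finish time when at most `instances` frames may be in flight."""
    cpu_free = 0     # earliest time the CPU can start preparing a frame
    gpu_free = 0     # earliest time the GPU can start processing a frame
    gpu_finish = []  # GPU completion time of each frame
    for i in range(frames):
        start = cpu_free
        if i >= instances:
            # Pool exhausted: wait until the oldest frame releases its instance.
            start = max(start, gpu_finish[i - instances])
        cpu_end = start + cpu_ms
        gpu_end = max(cpu_end, gpu_free) + gpu_ms
        gpu_finish.append(gpu_end)
        cpu_free, gpu_free = cpu_end, gpu_end
    return gpu_finish[-1]

print(total_time(4, 5, 10, instances=1))  # 60: CPU and GPU take turns
print(total_time(4, 5, 10, instances=2))  # 45: CPU prepares while GPU works
```

With a single instance the two processors strictly alternate, so each frame costs the sum of both times. With two instances the CPU prepares the next frame while the GPU is busy, and the slower processor alone sets the pace.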

Difference between CPU and GPU:

As said earlier, the GPU is a complementary aid to the CPU. It can work with the CPU to increase efficiency, but it can never be a substitute for it. The CPU manages the whole system, controls all the instructions going in and out, and ensures everything runs smoothly. The GPU, on the other hand, works on specific tasks such as graphics and related calculations. Because it has many cores, the GPU can handle more tasks at once and finishes that kind of work faster than the CPU, whereas the CPU is limited in how many tasks it can perform in a given time by its smaller number of cores. To sum up, the CPU is the versatile unit and the GPU is the faster one.

FAQs:

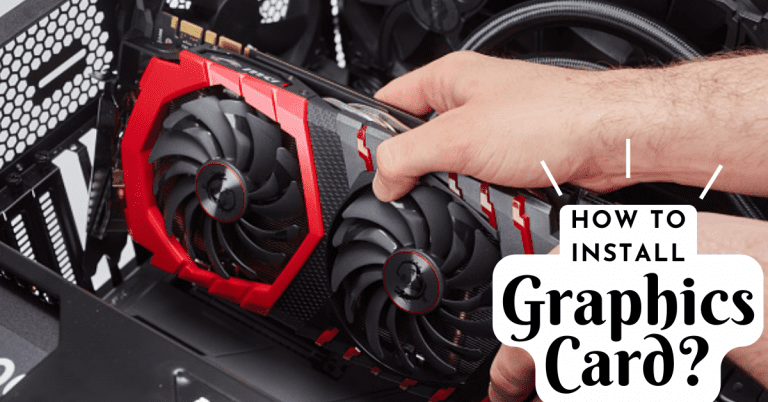

1. How do CPU, GPU, and SSD work together to build a good gaming PC?

Designing a good and fast gaming PC requires a powerful engine (CPU), a lightweight body (GPU), and efficient storage options (SSD). The CPU handles the system’s physics calculations, and a high-quality CPU with multiple cores operating at a high GHz can process data quickly. The GPU is responsible for sending pixels to the display, and a stand-alone GPU is preferable for gaming. The refresh rate, power supply, and slot space also need to be considered when choosing a GPU. The SSD, often the unsung hero of gaming PCs, helps to reduce latency and keeps games running smoothly. An SSD with a non-volatile memory express (NVMe) connection is the fastest, as it links directly to the computer’s PCIe lanes. The right combination of these elements in a gaming PC provides an immersive, speedy, and reliable gaming experience. Simply buying the most expensive option is not enough, as you’ll need to look for the best-in-class CPUs, GPUs, and SSDs.

2. Is a GPU capable of completing more work within the same timeframe as compared to a CPU?

It depends on what kind of work you’re talking about. If the work can be split into separate parts that can be done at the same time, a GPU might be faster. But if the work needs to be done in a certain order, a GPU might not be as good. It also depends on how good the GPU and CPU are: a newer and better GPU might do better than an old and not-so-good CPU.
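One common way to put rough numbers on this answer is Amdahl's law, which estimates the best-case speedup when only part of a job can run in parallel. The sketch below is illustrative only: the function name `speedup` and the example figures are assumptions, and real hardware adds costs (such as data transfer) that the formula ignores.

```python
def speedup(parallel_fraction, workers):
    """Amdahl's law: the serial part runs as before, the rest is split evenly."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / workers)

# Fully parallel work scales with the number of workers.
print(speedup(1.0, 8))     # 8.0
# Work that must run in order gains nothing from extra workers.
print(speedup(0.0, 1000))  # 1.0
# Half-parallel work stays below 2x no matter how many workers are added.
print(speedup(0.5, 1000) < 2.0)  # True
```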

Conclusion:

To summarize, the GPU and CPU work together because modern computers need to handle a lot of information quickly. For the best computing experience, it’s important to have a CPU and a GPU that work well together. This can help your computer run faster and better for gaming, work, or any other use.